Publications

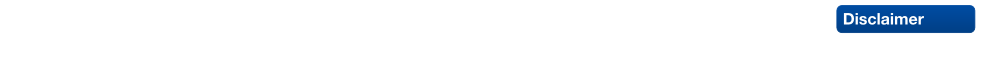

Probabilistic Labeling Cost for High-Accuracy Multi-view Reconstruction

In this paper, we propose a novel labeling cost for multi-view reconstruction. Existing approaches use data terms whose specific weaknesses make them vulnerable to common challenges, such as low-textured regions or specularities. Our new probabilistic method implicitly discards outliers and can be shown to become more accurate the closer the estimate gets to the true object surface. Our approach achieves top results among all published methods on the Middlebury DINO SPARSE dataset and also delivers accurate results on several other datasets with widely varying challenges, to which it is applied without modification.

Real-Time RGB-D based People Detection and Tracking for Mobile Robots and Head-Worn Cameras

We present a real-time RGB-D based multi-person detection and tracking system suitable for mobile robots and head-worn cameras. Our approach combines RGB-D visual odometry estimation, region-of-interest processing, ground plane estimation, pedestrian detection, and multi-hypothesis tracking components into a robust vision system that runs at more than 20 fps on a laptop. As object detection is the most expensive component in any such integration, we invest significant effort into taking maximum advantage of the available depth information. In particular, we propose to use two different detectors for different distance ranges. For the close range (up to 5-7 m), we present an extremely fast depth-based upper-body detector that enables video-rate system performance on a single CPU core when applied to Kinect sensors. To also cover farther distance ranges, we optionally add an appearance-based full-body HOG detector (running on the GPU) that exploits scene geometry to restrict the search space. Our approach works both with Kinect RGB-D input in indoor settings and with stereo depth input in outdoor scenarios. We quantitatively evaluate our approach on challenging indoor and outdoor sequences and show state-of-the-art performance in a large variety of settings. Our code is publicly available.

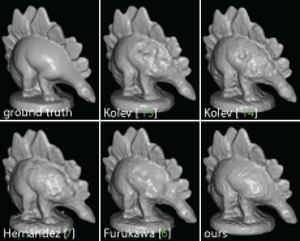

Dense 3D Semantic Mapping of Indoor Scenes from RGB-D Images

Dense semantic segmentation of 3D point clouds is a challenging task. Many approaches address 2D semantic segmentation and obtain impressive results. With the availability of cheap RGB-D sensors, the field of indoor semantic segmentation has seen a lot of progress. Still, it remains unclear how best to approach 3D semantic segmentation. We propose a novel 2D-3D label transfer based on Bayesian updates and dense pairwise 3D Conditional Random Fields. This approach allows us to use 2D semantic segmentations to create a consistent 3D semantic reconstruction of indoor scenes. To this end, we also propose a fast 2D semantic segmentation approach based on Randomized Decision Forests. Furthermore, we show that it is not necessary to compute a semantic segmentation for every frame in a sequence in order to create accurate semantic 3D reconstructions. We evaluate our approach on both NYU Depth datasets and show that we obtain a significant speed-up compared to other methods.
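The Bayesian label update mentioned above can be illustrated with a minimal sketch. It assumes that each reconstructed 3D point keeps a discrete distribution over class labels and that a 2D segmentation supplies per-class likelihoods for the points visible in a frame. The function name `bayesian_label_update` and the `(N, C)` array layout are hypothetical. This is a generic recursive Bayes filter, not the paper's implementation, and it omits the dense pairwise 3D CRF step entirely.

```python
import numpy as np


def bayesian_label_update(prior, likelihood, eps=1e-12):
    """One recursive Bayesian update of per-point class distributions.

    prior:      (N, C) array, current class distribution of N 3D points
    likelihood: (N, C) array, class scores from a 2D segmentation that have
                been projected onto the same N points (hypothetical input)
    Returns the normalized posterior, also of shape (N, C).
    """
    # Bayes' rule: posterior is proportional to likelihood times prior.
    posterior = prior * likelihood
    # Renormalize each row; eps guards against rows that sum to zero.
    norm = np.maximum(posterior.sum(axis=1, keepdims=True), eps)
    return posterior / norm


if __name__ == "__main__":
    # Two points, three classes, starting from a uniform prior.
    belief = np.full((2, 3), 1.0 / 3.0)
    frame_scores = np.array([[0.7, 0.2, 0.1],
                             [0.1, 0.1, 0.8]])
    belief = bayesian_label_update(belief, frame_scores)
    belief = bayesian_label_update(belief, frame_scores)
    print(belief.argmax(axis=1))
```

Because each update only multiplies in new evidence, a frame without a segmentation simply contributes no update in this sketch; repeated consistent observations sharpen the distribution.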

@inproceedings{Hermans14ICRA,
  author = {Alexander Hermans and Georgios Floros and Bastian Leibe},
  title = {{Dense 3D Semantic Mapping of Indoor Scenes from RGB-D Images}},
  booktitle = {International Conference on Robotics and Automation},
  year = {2014}
}

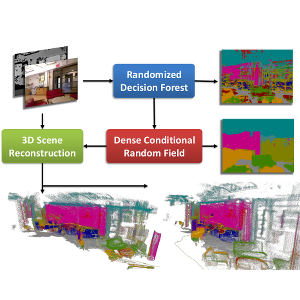

Multiple Target Tracking for Marker-less Augmented Reality

In this work, we implemented an AR framework for planar targets based on the ORB feature-point descriptor. The main components of the framework are a detector, a tracker, and a graphical overlay. The detector returns a homography that maps the model image onto the target in the camera image. The homography is estimated from a set of feature-point correspondences using the Direct Linear Transform (DLT) algorithm and Levenberg-Marquardt (LM) optimization. Outliers in the set of feature-point correspondences are removed using RANSAC. The tracker is based on the Kalman filter, which enforces consistent motion of the target. In a hierarchical matching scheme, we extract additional matches from consecutive frames and from perspectively transformed model images, which yields more accurate and jitter-free homography estimates. The graphical overlay computes the six-degree-of-freedom (6DoF) pose from the estimated homography. Finally, to visualize the computed pose, we draw a cube on the surface of the tracked target. In the evaluation, we analyze the performance of our system in terms of the accuracy of the estimated homography and the ratio of correctly tracked frames. The evaluation is based on the ground truth provided by two datasets. We evaluate most components of the framework under different target movements and lighting conditions. In particular, we show that our framework is robust against considerable perspective distortion and demonstrate the benefit of the hierarchical matching scheme for minimizing jitter and improving accuracy.
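The DLT step described above can be sketched as the textbook normalized DLT: each correspondence contributes two linear equations in the nine entries of the homography, and the solution is the right singular vector of the stacked system with the smallest singular value. The function names below are hypothetical, and the RANSAC outlier rejection and Levenberg-Marquardt refinement used in the framework are deliberately left out; this is not the authors' code.

```python
import numpy as np


def normalize_points(pts):
    """Similarity transform T giving zero mean and mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])  # homogeneous coordinates
    return (T @ pts_h.T).T, T


def dlt_homography(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from >= 4 point correspondences."""
    src_n, T_src = normalize_points(np.asarray(src, dtype=float))
    dst_n, T_dst = normalize_points(np.asarray(dst, dtype=float))
    rows = []
    for (x, y, w), (u, v, t) in zip(src_n, dst_n):
        # Two independent rows of the cross-product constraint dst x (H src) = 0.
        rows.append([0, 0, 0, -t * x, -t * y, -t * w, v * x, v * y, v * w])
        rows.append([t * x, t * y, t * w, 0, 0, 0, -u * x, -u * y, -u * w])
    A = np.array(rows)
    # The least-squares solution of A h = 0 with |h| = 1 is the last row of Vt.
    _, _, Vt = np.linalg.svd(A)
    H_n = Vt[-1].reshape(3, 3)
    # Undo the normalization and fix the projective scale.
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]
```

In practice, libraries such as OpenCV provide `cv2.findHomography` with a `cv2.RANSAC` option, which wraps a similar linear estimate inside robust outlier rejection.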

A Flexible ASIP Architecture for Connected Components Labeling in Embedded Vision Applications

Real-time identification of connected regions of pixels in large (e.g., Full HD) frames is a mandatory and expensive step in many computer vision applications that are becoming increasingly popular on embedded mobile devices such as smartphones, tablets, and head-mounted devices. Standard off-the-shelf embedded processors are not yet able to meet the performance/flexibility trade-offs required by such applications. Therefore, in this work we present an Application Specific Instruction Set Processor (ASIP) tailored to concurrently execute thresholding, connected components labeling, and basic feature extraction on image frames. The proposed architecture handles frame sizes ranging from QCIF to Full HD with 1 to 4 bytes per pixel, while achieving an average frame rate of 30 frames per second (fps). Synthesis was performed for a standard 65 nm CMOS library, yielding an operating frequency of 350 MHz and an area of 2.1 mm². Moreover, evaluations were conducted on both typical and synthetic data sets in order to thoroughly assess the achievable performance. Finally, a complete planar-marker-based augmented reality application was developed and simulated for the ASIP.
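As a software reference for the pipeline the ASIP accelerates (thresholding, connected components labeling, basic feature extraction), the sketch below uses the classical two-pass algorithm with a union-find forest and 4-connectivity. The abstract does not state which labeling algorithm or connectivity the hardware implements, and the choice of area and bounding box as the "basic features" is an assumption; `label_components` is a hypothetical name.

```python
def label_components(image, threshold):
    """Threshold a 2D grayscale image and label 4-connected foreground regions.

    Returns (labels, features): labels is a list of lists of ints (0 = background),
    features maps each final label to its area and bounding box [x0, y0, x1, y1].
    """
    h = len(image)
    w = len(image[0]) if h else 0
    parent = [0]  # union-find forest; index 0 is reserved for background

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[max(ra, rb)] = min(ra, rb)  # keep the smaller label as root

    labels = [[0] * w for _ in range(h)]
    # First pass: provisional labels from the upper and left neighbours.
    for y in range(h):
        for x in range(w):
            if image[y][x] < threshold:
                continue  # background pixel
            up = labels[y - 1][x] if y > 0 else 0
            left = labels[y][x - 1] if x > 0 else 0
            if up == 0 and left == 0:
                parent.append(len(parent))  # new label is its own root
                labels[y][x] = len(parent) - 1
            else:
                labels[y][x] = min(l for l in (up, left) if l)
                if up and left:
                    union(up, left)  # record that both labels are equivalent
    # Second pass: resolve equivalences and accumulate per-region features.
    features = {}
    for y in range(h):
        for x in range(w):
            if labels[y][x] == 0:
                continue
            root = find(labels[y][x])
            labels[y][x] = root
            f = features.setdefault(root, {"area": 0, "bbox": [x, y, x, y]})
            f["area"] += 1
            b = f["bbox"]
            b[0], b[1] = min(b[0], x), min(b[1], y)
            b[2], b[3] = max(b[2], x), max(b[3], y)
    return labels, features
```

The two-pass structure streams over the frame in raster order, which is one reason this family of algorithms is popular for hardware implementations; the final labels here are not renumbered to be consecutive.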

Universalities in Fundamental Diagrams of Cars, Bicycles and Pedestrians

Since the pioneering work of Greenshields, the fundamental diagram has been used to characterize and describe the performance of traffic systems. In recent years, the ongoing discussion and a growing database have revealed the influence of human factors, traffic types, and measurement methods on this relation. This variety of influences is important and relevant for applications, but it shifts the discussion away from the main feature characterizing the transport properties of traffic systems. We refocus on this main feature by comparing the fundamental diagrams of cars, bicycles, and pedestrians moving in single file along a course with periodic boundaries. The underlying data were collected in three experiments performed under well-controlled laboratory conditions. In all experiments, the setup combined with technical equipment or computer vision methods allowed the trajectories to be determined with high precision. The trajectories, visualized as space-time diagrams, show three different states of motion (free flow state, jammed state, and stop-and-go waves) in all these systems. As expected, the values of speed, density, and flow cover different ranges for the three systems. However, after a simple rescaling of the velocity by the free speed and of the density by the length of the agents, the fundamental diagrams agree in the position and height of the capacity. This indicates that the similarities between the systems go deeper than expected and opens up the possibility of a universal model for heterogeneous traffic systems.
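The rescaling described above can be made concrete with a small worked sketch. It uses the standard relation J = ρv between flow J, density ρ (agents per metre), and velocity v, then multiplies the density by the agent length l and divides the velocity by the free speed v0, so the rescaled flow equals J·l/v0. The function names are hypothetical, and the numbers in the usage lines are illustrative values, not data from the experiments.

```python
def rescale_fundamental_diagram(density, velocity, agent_length, free_speed):
    """Return dimensionless (density, velocity, flow) triples.

    density:      agents per metre along the course
    velocity:     metres per second
    agent_length: characteristic length of one agent in metres
    free_speed:   free-flow speed in metres per second
    """
    points = []
    for rho, v in zip(density, velocity):
        rho_s = rho * agent_length  # occupied fraction of the course
        v_s = v / free_speed        # speed relative to free flow
        points.append((rho_s, v_s, rho_s * v_s))  # rescaled flow = J * l / v0
    return points


def capacity(points):
    """Return the rescaled point with maximal flow (the capacity)."""
    return max(points, key=lambda p: p[2])


if __name__ == "__main__":
    # Illustrative numbers only, not measured values.
    pts = rescale_fundamental_diagram([0.05, 0.1, 0.15], [18.0, 10.0, 3.0],
                                      agent_length=5.0, free_speed=20.0)
    print(capacity(pts))
```

Comparing the capacity point of each agent type after this rescaling corresponds to the comparison of "position and height of the capacity" in the abstract.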

Universal flow-density relation of single-file bicycle, pedestrian and car motion

The relation between flow and density is an essential quantitative characteristic for describing the efficiency of traffic systems. We have performed experiments with single-file motion of bicycles and compared the results with previous studies of car and pedestrian motion in similar setups. In the space–time diagrams, we observe three different states of motion (free flow state, jammed state, and stop-and-go waves) in all these systems. Despite their obvious differences, they are described by a universal fundamental diagram after a proper rescaling of space and time that takes into account the size and free velocity of the three kinds of agents. This indicates that the similarities between the systems go deeper than expected.
