Localize Me

Torsten Sattler, Tobias Weyand, Ming Li, Jan Robert Menzel, Arne Schmitz, Christian Kalla, Pascal Steingrube, Siyu Tang and Bastian Leibe

Summary

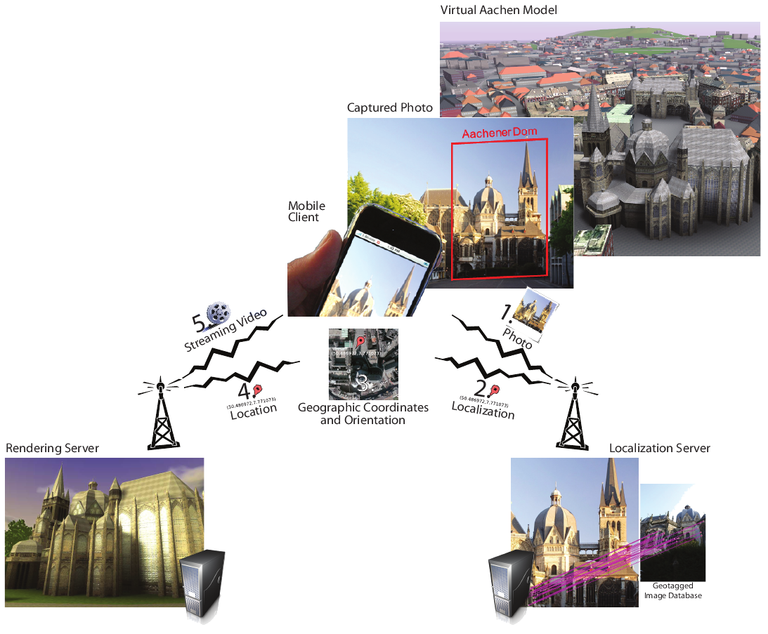

LocalizeMe is an image-based localization system developed in cooperation with the Computer Graphics and Multimedia Group (Prof. Dr. Leif Kobbelt) within the UMIC Cluster of Excellence. Given a query photo a user took with their cell phone, LocalizeMe determines the user's position and transports them into a virtual reconstruction of the corresponding scene, where they can continue their exploration without physical restrictions.

In contrast to conventional localization methods like GPS or cell tower triangulation, image-based localization is more precise and works just as reliably in areas with large buildings, and even indoors. Furthermore, the query image can be used not only to localize the user, but also to supply them with information on what they see, such as Wikipedia articles on historic buildings or opening hours and user ratings of cafes and restaurants.

System overview

LocalizeMe combines several component technologies. The captured image is transmitted to a central localization server (1), which recognizes the image content and determines the user's current location and orientation (2,3). This information is then used to query a rendering server (4), which visualizes the Virtual Aachen model, a 3D model of the whole city of Aachen, and transmits the resulting video stream back to the mobile device (5), where it is displayed in real time.

Localization Server

The localization system is based on state-of-the-art image recognition techniques that allow scalable image-based localization with very short response times (2-3 seconds). The query photo is uploaded to the localization server and matched against a database of thousands of images from the city of Aachen. The closest matching images are then used to determine the position of the user. The underlying image database consists of geotagged panoramic images, similar to the panoramic images used in Google Street View. Since recognition is based on the image data itself, little preprocessing is required, which makes it easy to adapt the recognition server to other cities. This is particularly interesting since Google Street View is available in an increasing number of cities. Because of the space-efficient indexing scheme and sub-linear retrieval techniques, the computational requirements for the recognition server are low enough for a normal desktop computer to handle. Furthermore, this pipeline is not limited to image-based localization but can also be used for recognizing landmark buildings and other objects.
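The retrieve-then-localize idea can be illustrated with a minimal sketch. It is not the LocalizeMe implementation: the class `GeoImageIndex`, the use of integer "visual word" ids as image descriptors, the idf-style scoring, and the weighted-mean position estimate are all assumptions made for illustration. An inverted index is used because it only touches database images that share a word with the query, which is one common way to avoid scanning the whole database. The sketch estimates position only, not orientation.

```python
from collections import defaultdict
from math import log


class GeoImageIndex:
    """Toy inverted index over geotagged database images (hypothetical)."""

    def __init__(self):
        self.postings = defaultdict(set)  # visual word id -> set of image ids
        self.geotags = {}                 # image id -> (lat, lon)

    def add(self, image_id, words, lat, lon):
        """Register a database image with its visual words and geotag."""
        self.geotags[image_id] = (lat, lon)
        for w in set(words):
            self.postings[w].add(image_id)

    def query(self, words, k=3):
        """Return up to k (image_id, score) pairs, best match first."""
        n = len(self.geotags)
        scores = defaultdict(float)
        for w in set(words):
            hits = self.postings.get(w, ())
            if not hits:
                continue
            idf = log(1 + n / len(hits))  # rare words contribute more
            for image_id in hits:
                scores[image_id] += idf
        # Sort by descending score; break ties by image id for determinism.
        ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
        return ranked[:k]

    def locate(self, words, k=3):
        """Estimate (lat, lon) as the score-weighted mean of the top-k geotags.

        Averaging raw latitude/longitude is only a reasonable approximation
        over small areas such as a single city.
        """
        top = self.query(words, k)
        total = sum(score for _, score in top)
        if total == 0:
            return None  # no database image shares a word with the query
        lat = sum(self.geotags[i][0] * s for i, s in top) / total
        lon = sum(self.geotags[i][1] * s for i, s in top) / total
        return lat, lon


# Usage with made-up word ids and approximate coordinates in Aachen.
index = GeoImageIndex()
index.add("cathedral", [1, 2, 3, 4], 50.7747, 6.0839)
index.add("town_hall", [3, 4, 5, 6], 50.7762, 6.0838)
index.add("station", [7, 8, 9], 50.7678, 6.0912)
print(index.query([1, 2, 3], k=2))
print(index.locate([1, 2, 3], k=2))
```

In this toy setup the query shares most of its words with the "cathedral" entry, so the estimated position falls between the two best matches but closer to that image's geotag.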

Rendering Server

The real-time city visualization is based on the Virtual Aachen model developed at the Computer Graphics and Multimedia Group. The user's mobile phone establishes a streaming connection with the rendering server, allowing the user to freely navigate the scene using touch gestures. In order to save bandwidth and increase responsiveness compared to video streaming, a low-poly 3D model of the current view is transmitted to the user's mobile phone at regular intervals and rendered locally using the mobile phone's GPU.

Contact

For additional information on LocalizeMe, please contact [Tobias Weyand](/person/17/).